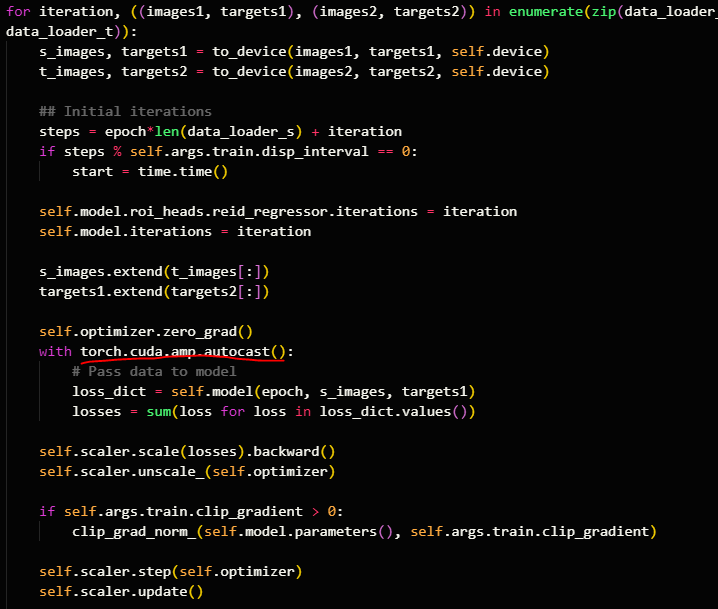

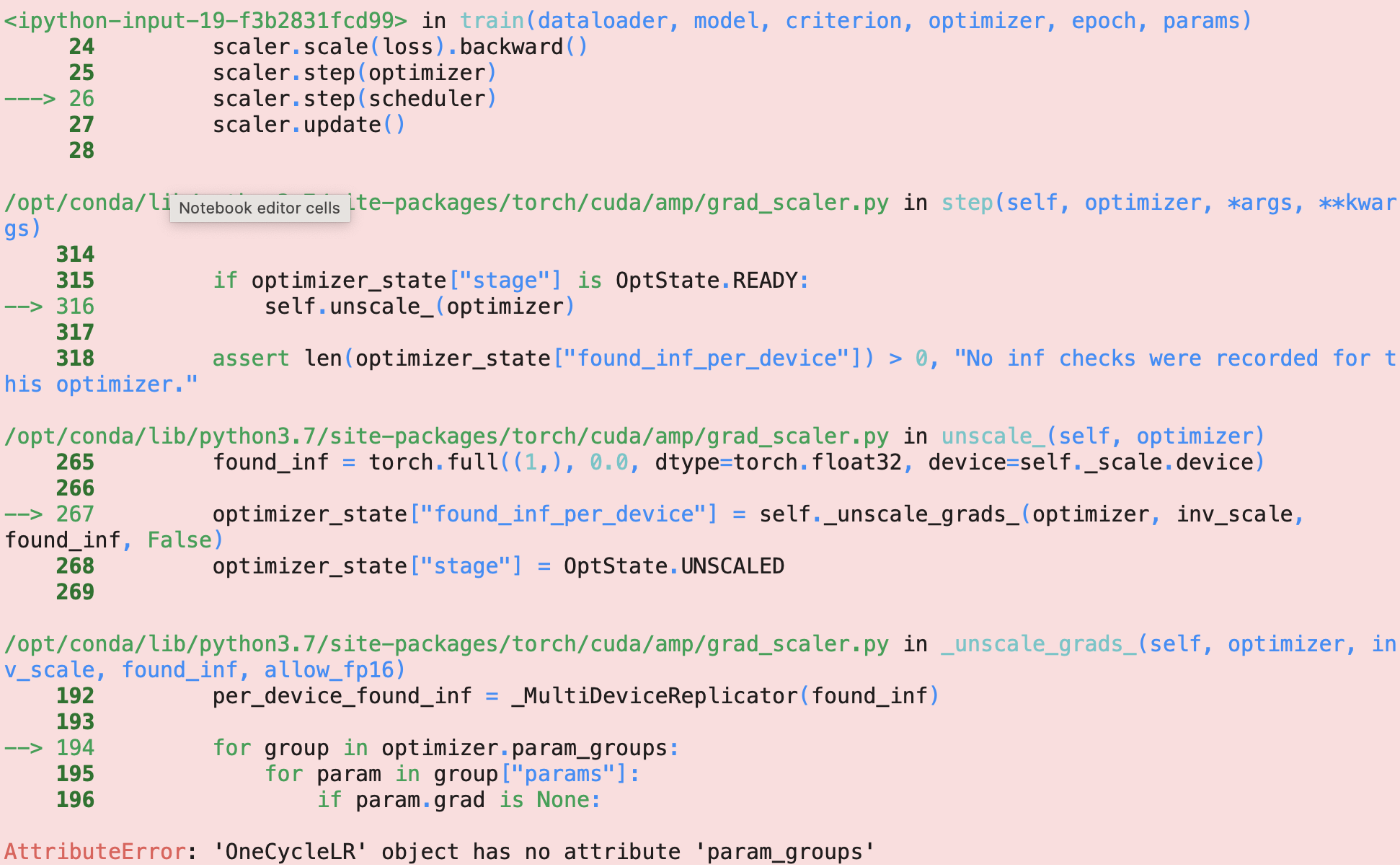

What is the correct way to use mixed-precision training with OneCycleLR - mixed-precision - PyTorch Forums

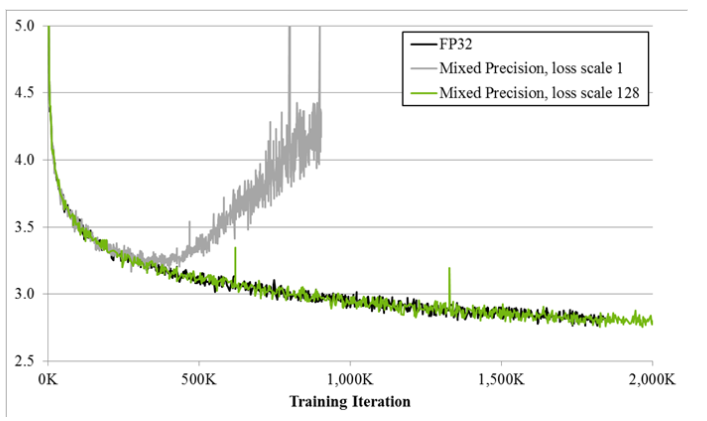

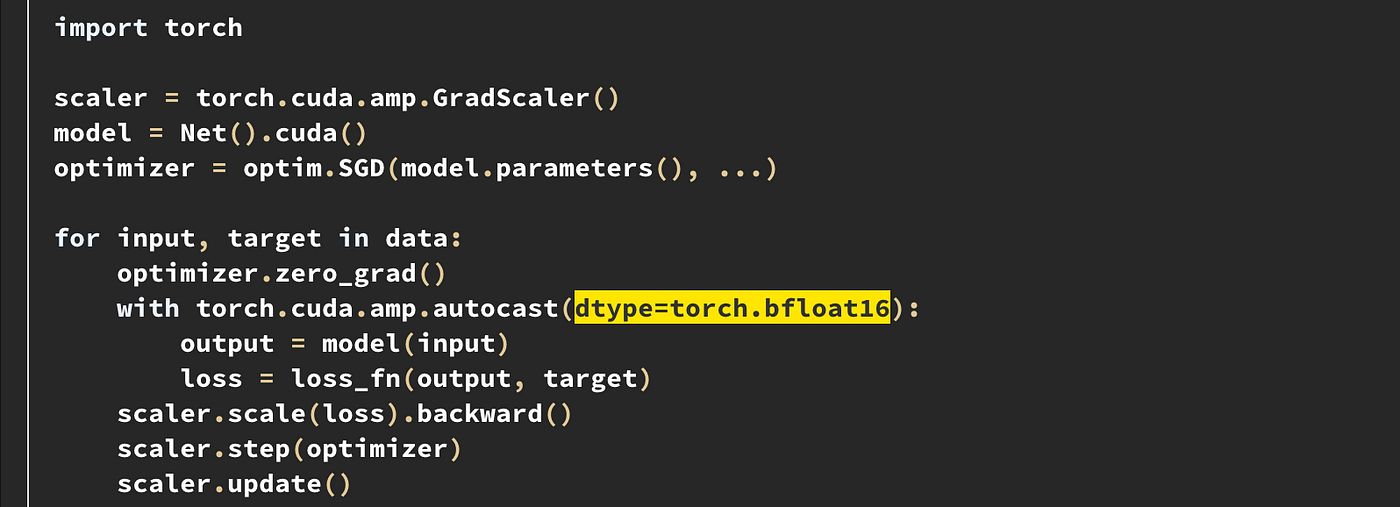

PyTorch on X: "For torch <= 1.9.1, AMP was limited to CUDA tensors using `torch.cuda.amp.autocast()`. v1.10 onwards, PyTorch has a generic API `torch.autocast()` that automatically casts * CUDA tensors to

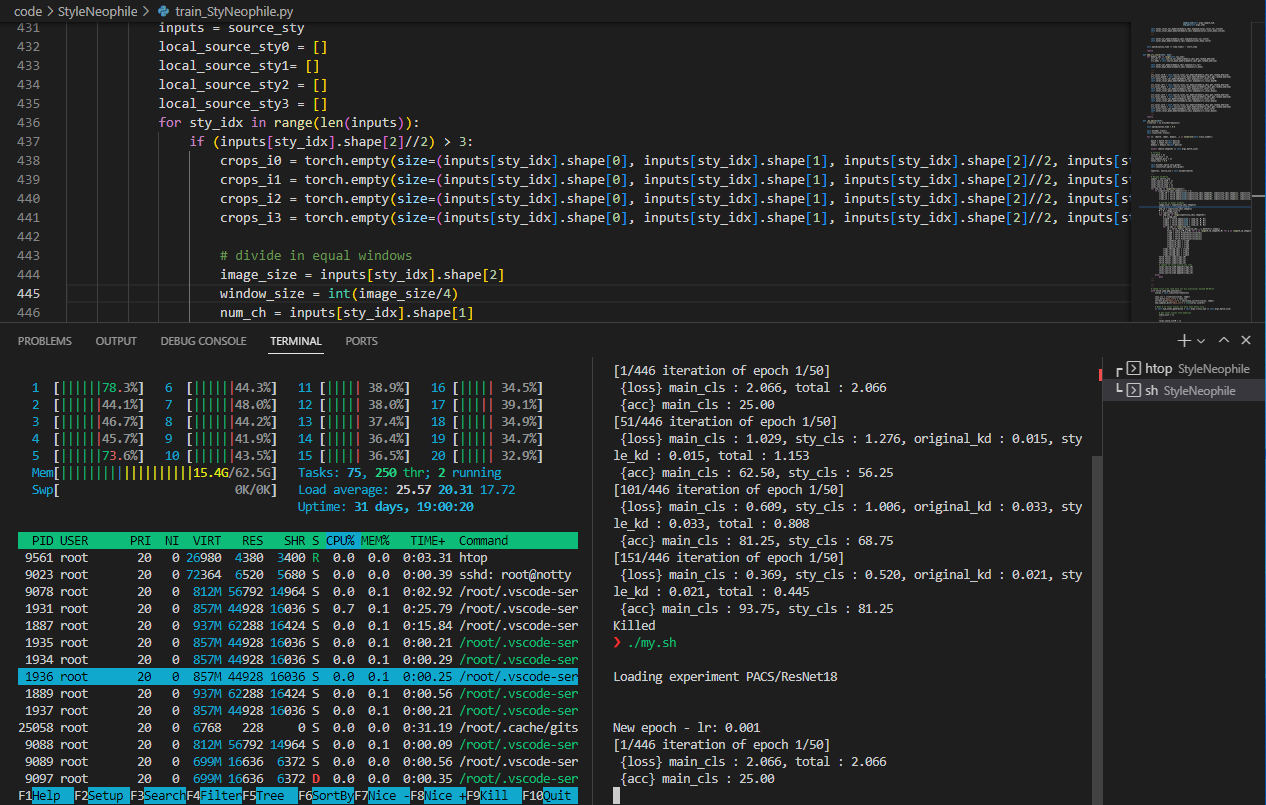

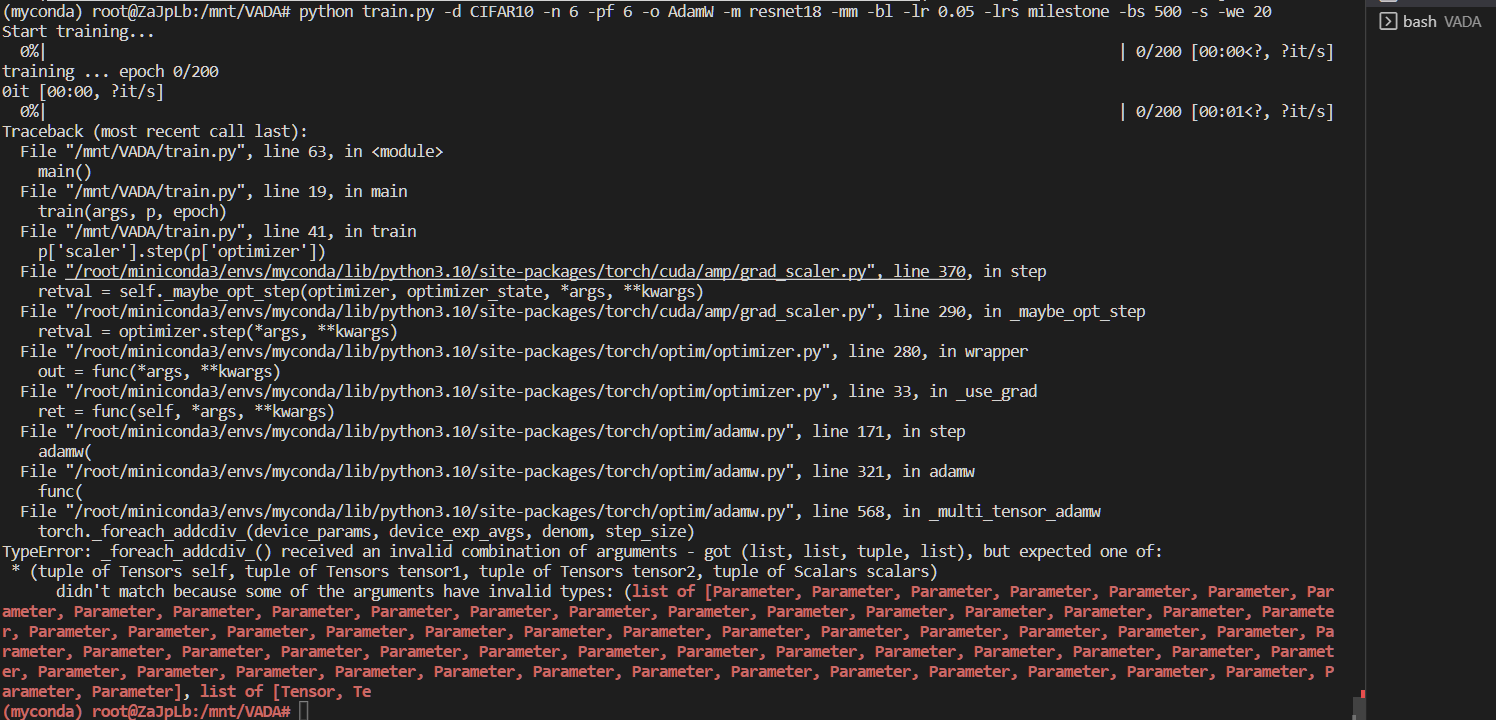

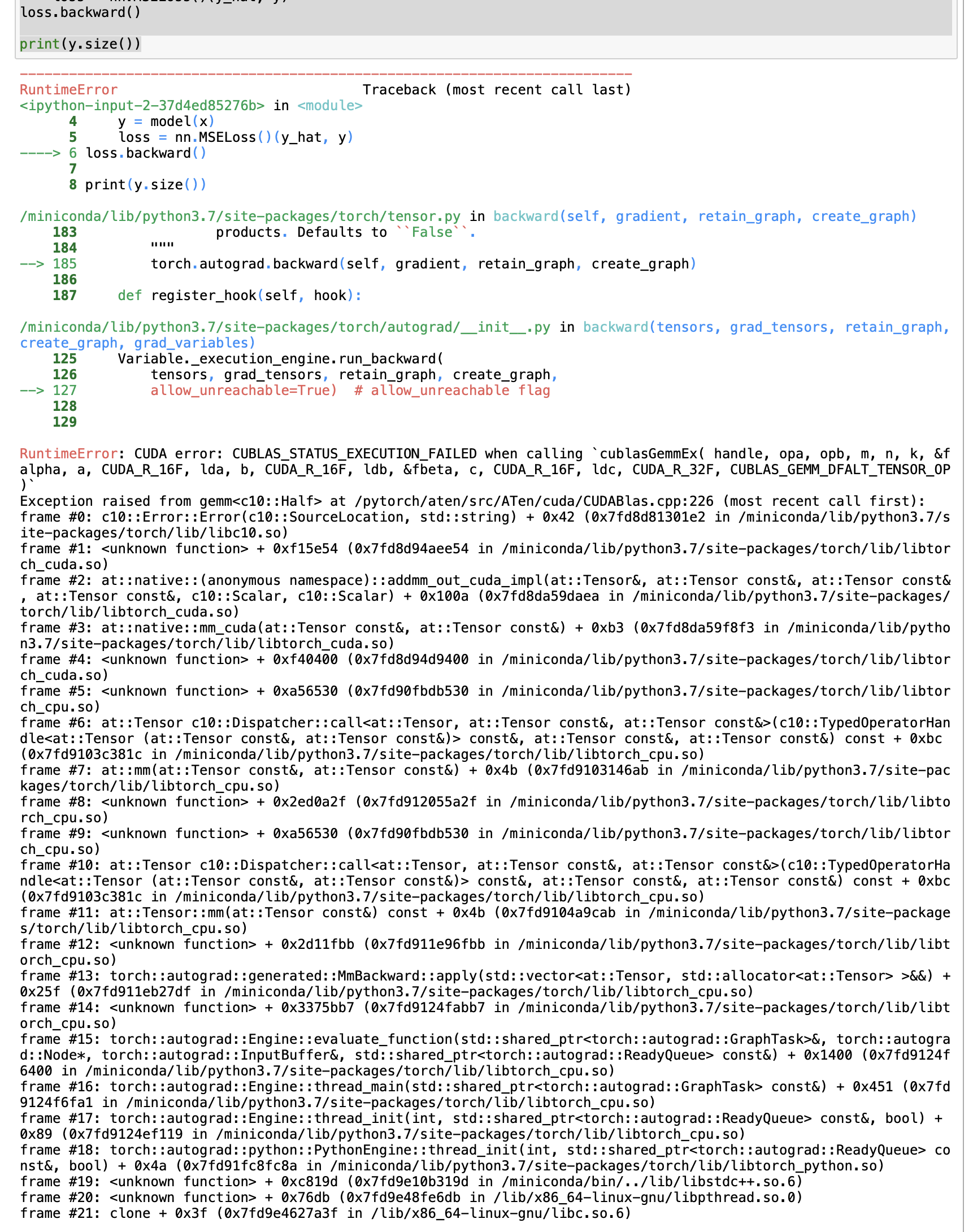

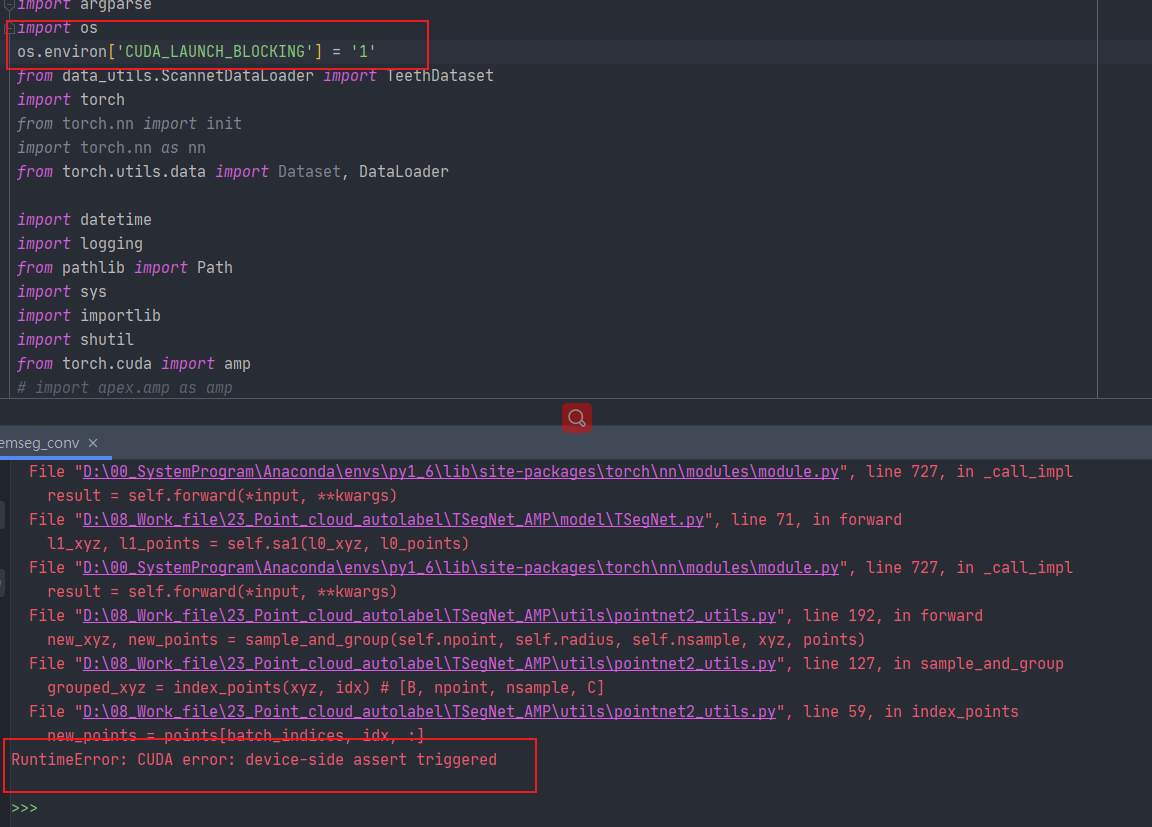

When I use AMP to accelerate the model, I get the error “RuntimeError: CUDA error: device-side assert triggered” - mixed-precision - PyTorch Forums